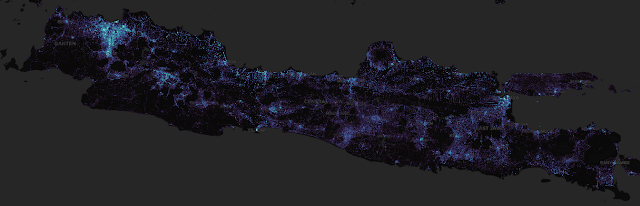

In the previous post I discussed how to download bulding footprints data from Google and Microsoft that cover the whole buildings footprint in the world. The next thing that came later was how to visualize such the big data with the size of several Gigabyte or even larger in my small machine. For example I downloaded Microsoft building footprints for Indonesia region with the size of 20.7 GB. Loading such extensive data into QGIS didn't work and made it crashed. Finally I can visualize the whole buildings in Indonesia with Python as in the figure 1 which focuses on the subset for Java Island only.

|

|

Figure 1. Building Density Java-Indonesia |

If you find yourself facing challenges in viewing large geospatial data

after downloading it, you're not alone. This post is tailored to address

such situations. In this tutorial, I will guide you through the process of

visualizing extensive data using Python. By the end of this tutorial, you

should be able to visualize your own large-scale geospatial data.

Importing Librabry

We start this tutorial by importing some libraries such as datashader, geopandas and colorcet. Datashader is a main library in this tutorial that visualize big data in three steps: Projection, aggregation and transformation. The ouput is a raster or image that visualize the aggregation of data into each pixel of the image. Transforming a large data into a raster format will extremely reduce the size of data that can be viewed much more faster.

Geopandas in a Python library that is used to handle spatial data in Python.

Basically it is used for input/output spatial data, spatial processing and

analysis. Lastly the colorcet library is used for colormap.

To import the libraries can be done using the following code.

1 2 3 |

import datashader as ds, datashader.transfer_functions as tf import geopandas as gpd import colorcet as cc |

Reading Spatial Data

After importing all required libraries, let's get and read the data. For

this tutorial I used

Microsoft roads dataset. The road data was mined from Bing Maps imagery between 2020 and 2022

including Maxar and Airbus. The data cover all around the world with the

total length of 48.9 million KM. It's free to download with

Open Data Commons Open Database License (ODbL). For another sample dataset can be found from

geoparquet

website.

To read the data, we can use geopandas read_parquet() function as

below.

road_df=gpd.read_parquet('Roads.parquet')

The data will be loaded as a dataframe. Therefore you can see the data rows

quickly as seen in the figure 2 with dataframe head() method.

Furthermore you can also get another info about the data with

info() or describe() method.

road_df.head()

|

|

Figure 2. Roads Dataframe |

Creating Canvas and Aggregate Data

Before rendering the data, we need to create a canvas first. The following code

is used to create a canvas in 500 pixels wide and 400 pixels tall.

canvas = ds.Canvas(plot_width=500, plot_height=400) agg = canvas.line(road_df,geometry='geometry', agg=ds.count())

In the next line, we're using the line() method of the canvas object

to render lines from a DataFrame called road_df. Let's break down the

parameters:

- road_df: This is the DataFrame containing the data we want to render.

- geometry='geometry': This parameter specifies the column in the dataframe that contains the geometrical information (e.g., coordinates) for the lines to draw.

- agg=ds.count(): This parameter specifies how we want to aggregate the data. In this case, I used ds.count() to count occurrences of the data in the respected pixel.

So, the agg object will contain a representation of the lines from road_df aggregated onto the canvas, where each pixel represents the count of lines that overlap at that pixel. This aggregated representation can then be visualized using various plotting libraries.

Rendering The Data with Datashader

After aggregating the data, now let's visualize the data. For that we need

to create a variable that contains the datashader transfer function with

some defined variables as shown in the following code.

img = tf.shade(agg.where(agg>10),cmap=cc.fire, how="eq_hist") tf.set_background(img,"black")

Let's me explain those variables:

- agg.where(agg > 10): This filters the aggregated data (agg) to keep only the values where the count is greater than 10. This is a threshold to visualize only areas with a higher density of lines.

-

cmap=cc.fire: This parameter specifies the colormap to use for

coloring the data. In this case, I used the 'fire' colormap from the

Colorcet library. You can find the other colormap's name in the

Colorcet documentation.

- how="eq_hist": This parameter specifies the histogram equalization method for mapping the colors. Histogram equalization enhances the contrast in the image by stretching out the intensity range.

In the next line we're setting the background color of the image to black using the set_background() function from the transfer function module.

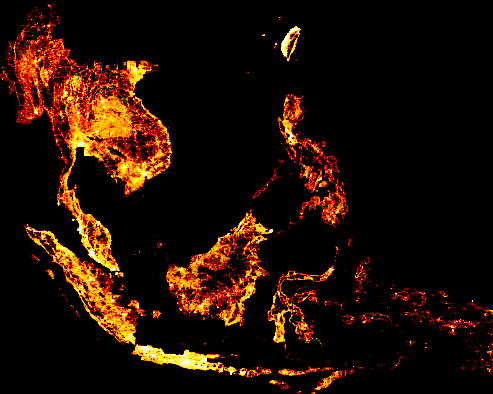

After running the code, the image will look like as in figure 3.

|

|

Figure 3. South East Asia Roads |

Viewing Certain Part of the Data

Great! We have made a road visualization for South East Asia from a big

dataset. What about if we want to view certain part of the data for example a specific region or country? For this purpose we can define x_range, and

y_range parameters in the canvas method. These parameters consist of minimum

and maximum coordinates. For example I want to view the road for Philippines

with min,max longitude(x) 119.756,126.726 and min,max latitude(y) 5.099,19.069

as in the following code.

1 2 3 4 5 |

ph=((119.756,126.726),(5.099,19.069)) canvas_ph = ds.Canvas(plot_width=300, plot_height=400,x_range=ph[0],y_range=ph[1]) agg = canvas_ph.line(road_df,geometry='geometry', agg=ds.count()) img = tf.shade(agg,cmap=cc.fire, how="eq_hist") tf.set_background(img,"black") |

After running the code it will return an image that shows the road for

Philippines as in figure 4.

|

| Figure 4. Philippines Roads |

Playing More with Visualization

Let's play more with visualization. Let's say we want to highlight the most

road density. For that I created ten classes gray color map as in line 4 in

the code below. After that I added a 'red' color into the colormap list cause

I want to highlight the last 10% of the data with the red color. The result

can be seen in the figure 5.

1 2 3 4 5 6 7 |

import numpy as np canvas_ph = ds.Canvas(plot_width=300, plot_height=400,x_range=ph[0],y_range=ph[1]) agg = canvas_ph.line(road_df,geometry='geometry', agg=ds.count()) colormap = [(i,i,i) for i in np.linspace(0,255,9)] colormap += ["red"] img=tf.shade(agg, cmap = colormap, how='eq_hist') tf.set_background(img,"black") |

|

|

Figure 6. Philippines Road. The most Density Highlight in Red |

Exporting Data Visualization to An Image

After creating the nice visualization, of course we want to save it to local disk for another purpose like sharing to other people, combine with another information or to make the visualization more attractive to user. To get the image output we can export it into an image.

To export the visualization result into an image can be used the following code

1 2 3 4 |

from functools import partial from datashader.utils import export_image export = partial(export_image, background = "black", export_path="export") export(tf.shade(agg, cmap=colormap),"philippines roads") |

In summary, the code sets up a function for exporting images with specified

background color and export path, and then it exports a shaded image of

aggregated data with the specified colormap under the name "philippines

roads".

That's all tutorial on geospatial big data visualization with

Python. Throughout this tutorial, we learned how to read big data,

perform aggregation, and create visualizations using Datashader in Python.

Hopefully this tutorial useful. Thank you.